From Block Position to AI-Driven DeFi: Lessons from the IC3 Research Retreat

Last week, I attended the IC3 Winter Retreat in Engelberg, one of the most prestigious and inspiring blockchain research retreats. IC3 brings together PhD students and professors from leading American and European universities, alongside practitioners from organizations such as the Ethereum and Solana Foundations, Flashbots, Chainlink, and Fidelity.

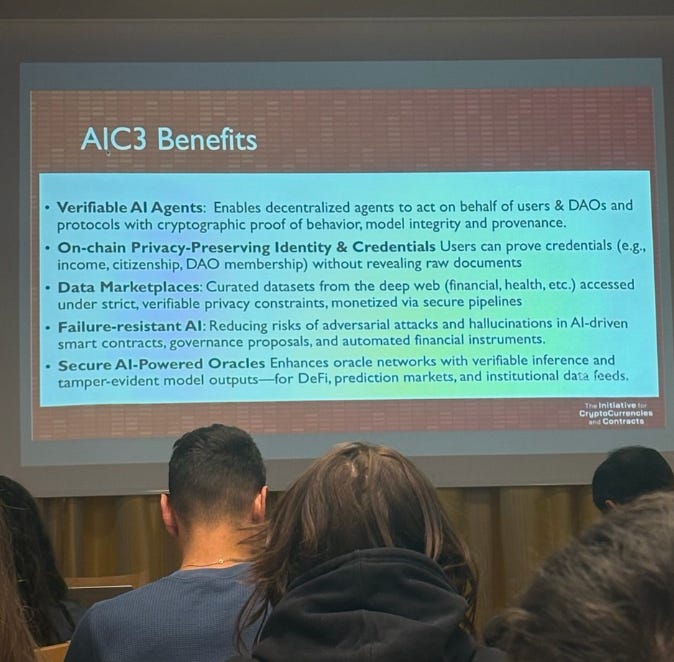

This was my second time at the retreat. As always, I was particularly interested in developments in DeFi, DeFi security, and AI. What stood out was how strongly current research is converging around privacy and AI. The IC3 member universities are launching an AI research initiative to strengthen work at the intersection of AI and blockchain.

It is becoming clear that progress in DeFi is no longer driven by isolated protocol improvements. Instead, it depends on how effectively these protocols can be coordinated and controlled, increasingly with the help of AI.

Block Position Has Become Economically Meaningful

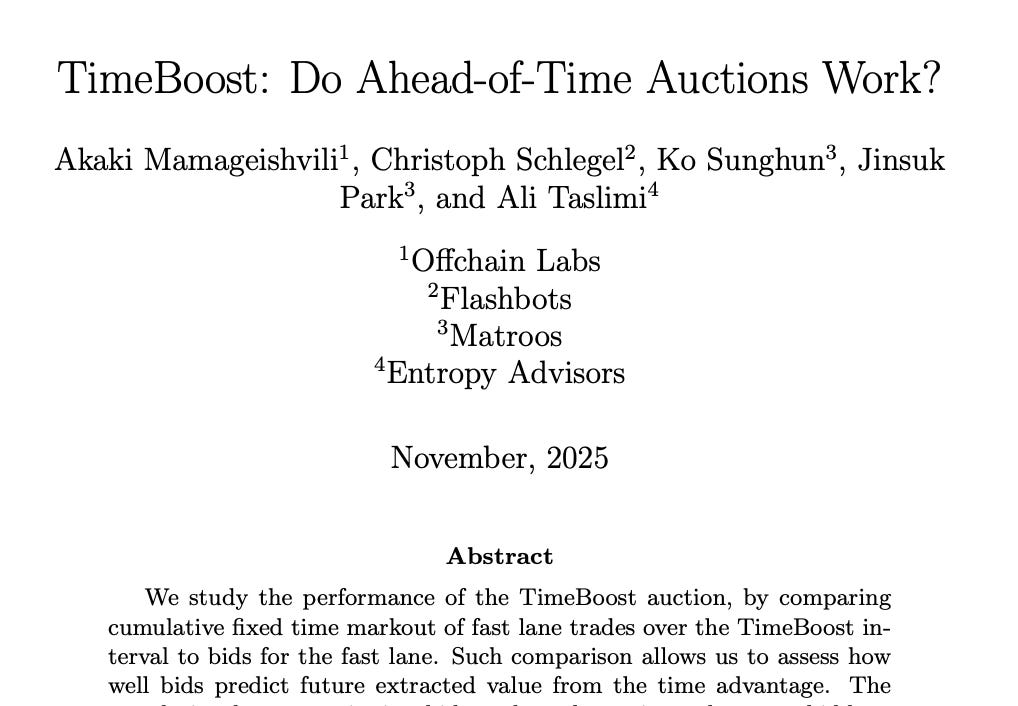

In current blockchain markets, the exact position of a transaction within a block can determine whether an arbitrage succeeds, whether a hedge is effective, or whether a trade is exposed to adverse selection. New layer-2 blockchains such as rollups aim to mitigate harmful MEV (maximal extractable value, i.e. profit extracted by reordering, inserting, or excluding transactions within a block) by introducing fair auction mechanisms for transaction ordering.

Users are therefore willing to pay for execution certainty. It is not primarily about speed, but about predictability. From an institutional perspective, this mirrors familiar concerns from traditional markets, where execution quality and slippage dominate raw latency considerations.
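To make the point about position concrete, here is a minimal sketch that is not taken from any talk at the retreat: two searchers target the same mispricing in a hypothetical constant-product pool (x · y = k), and their transactions are ordered by a hypothetical priority bid. Whoever executes first captures the arbitrage; the second transaction finds nothing left. Fees, gas, and the bid payments themselves are ignored, and all names and numbers are illustrative.

```python
import math

def arbitrage_to_external_price(pool, p_ext):
    """Buy X from a constant-product pool (x * y = k) until the pool price y/x
    reaches the external price p_ext, then value the X bought at p_ext.
    Only handles the case where X is cheaper in the pool than externally.
    Returns (new_pool, profit in units of Y). Fees and gas are ignored."""
    x, y = pool
    k = x * y
    target_y = math.sqrt(k * p_ext)  # Y reserve at which y / x == p_ext
    dy = target_y - y                # Y paid into the pool
    if dy <= 1e-9:                   # no mispricing left to capture
        return pool, 0.0
    new_x = k / target_y
    dx = x - new_x                   # X received from the pool
    return (new_x, target_y), dx * p_ext - dy

def execute_block(pool, p_ext, bids):
    """Order searchers by their (hypothetical) priority bid, highest first,
    and execute their arbitrage transactions sequentially on shared state."""
    profits = {}
    for searcher, _bid in sorted(bids.items(), key=lambda kv: kv[1], reverse=True):
        pool, profit = arbitrage_to_external_price(pool, p_ext)
        profits[searcher] = profit
    return profits

profits = execute_block(pool=(1000.0, 1000.0), p_ext=1.21, bids={"A": 3.0, "B": 2.0})
print(profits)  # A captures about 10 units of Y; B, executing second, gets 0.0
```

The same trade is worth roughly 10 units or nothing depending only on its slot, which is exactly the value an ordering auction puts a price on.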

What was particularly interesting from a research perspective is that short-horizon pricing of this advantage remains noisy. Even in well-designed auctions, participants struggle to predict the value of a given position accurately from minute to minute. Over longer horizons, patterns emerge more clearly. This suggests that participants can identify structural trends but still struggle to time them precisely. In other words, markets are efficient locally but remain fragile systemically.

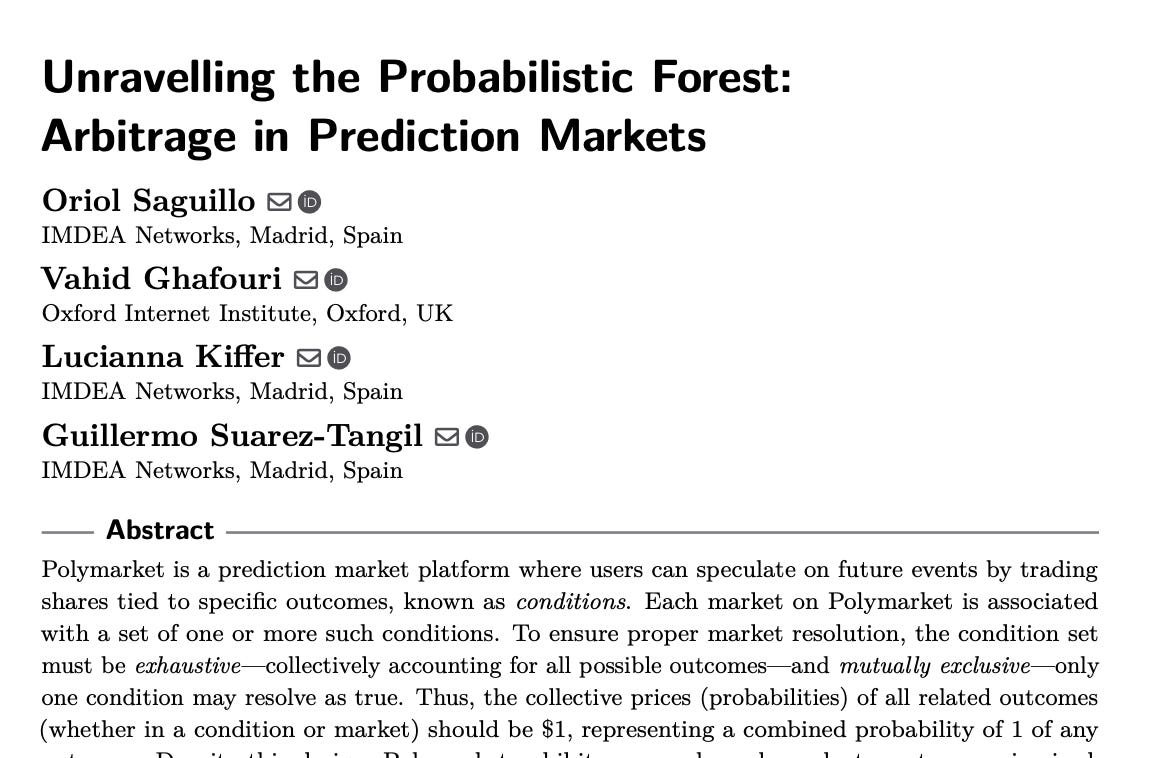

Arbitrage in Prediction Markets

Another fascinating theme was arbitrage across prediction markets, in which blockchain users bet on the outcome of events such as presidential elections. Interestingly, inconsistencies regularly arise across related prediction markets, creating opportunities for guaranteed profit.

Arbitrage is expected in maturing markets; what is interesting is how long it persists and why. As prediction market structures become more complex, identifying dependencies between bets exceeds human capacity. Small differences in phrasing, structure, or timing are enough to create exploitable inconsistencies across prediction market protocols.
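A simplified sketch of the two most basic consistency checks such a system would run, assuming quoted YES prices lie in [0, 1] and that a NO share costs 1 minus the YES price (real venues have spreads, fees, and resolution risk, all ignored here). The function names and example markets are hypothetical.

```python
def exhaustive_arbitrage(best_yes_prices):
    """Outcomes must be mutually exclusive and exhaustive (exactly one resolves
    YES). Buying one YES share of each at the cheapest venue costs
    sum(prices) and always pays exactly 1, so any shortfall below 1 is locked in."""
    return max(0.0, 1.0 - sum(best_yes_prices.values()))

def implication_arbitrage(price_a, price_b):
    """If event A logically implies event B, consistency requires
    price_a <= price_b. When violated, buy NO on A (assumed cost 1 - price_a)
    and YES on B (cost price_b): total cost is 1 - (price_a - price_b)."""
    return max(0.0, price_a - price_b)

def payoff_no_a_yes_b(a_true, b_true):
    """Payoff of holding one NO share on A and one YES share on B."""
    return (0 if a_true else 1) + (1 if b_true else 0)

# States allowed when A implies B: (A, B) = (T, T), (F, T), (F, F).
# The NO-A / YES-B pair pays at least 1 in every one of them.
consistent_states = [(True, True), (False, True), (False, False)]
assert min(payoff_no_a_yes_b(a, b) for a, b in consistent_states) == 1

# Hypothetical quotes gathered across venues for one election.
print(exhaustive_arbitrage({"candidate_x": 0.46, "candidate_y": 0.41, "other": 0.08}))
# A: "X wins by more than 5 points" (venue 1), B: "X wins" (venue 2).
print(implication_arbitrage(price_a=0.35, price_b=0.30))
```

Both checks report roughly 0.05 per share before costs. The hard part in practice is not this arithmetic but establishing, from differently worded markets, that outcomes really are exhaustive or that one event really implies another.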

This is a clear example of where automation and LLMs can be practically applied. Detecting these relationships, reasoning about them across markets, and acting consistently in real time requires systems that can operate across venues and abstractions. Human-driven strategies do not scale to this level of complexity.

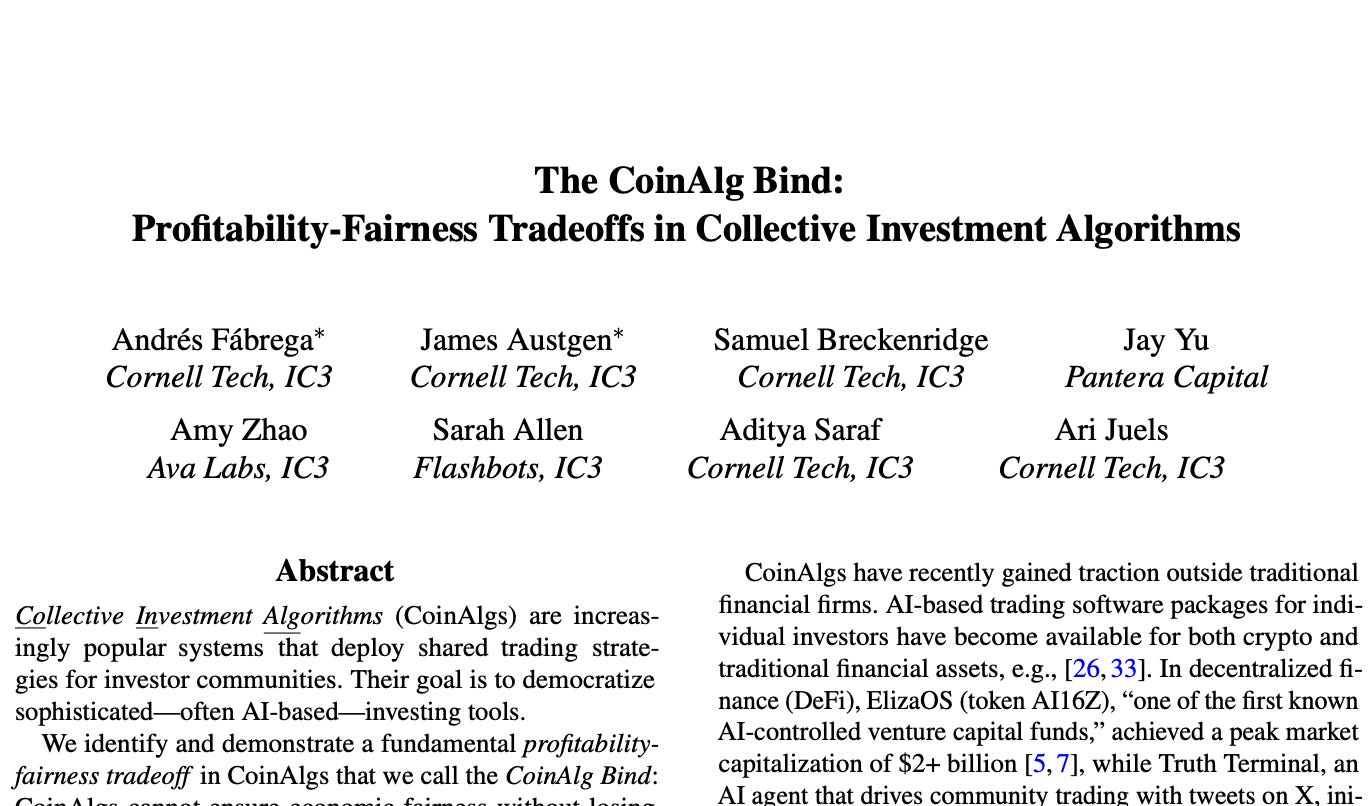

Transparency, Fairness, and CoinAlgs

A related discussion explored collective investment algorithms (CoinAlgs) and the trade-offs between profitability, fairness, and transparency. Fully transparent strategies encoded in a smart contract tend to lose profitability over time as other participants learn and arbitrage against them. More private strategies preserve performance, but introduce concerns around fairness and information asymmetry.

This trade-off is already relevant for vaults, strategy aggregators, and shared investment vehicles. From an institutional standpoint, it introduces a design constraint: governance, disclosure, and performance cannot be treated independently. They must be coordinated deliberately, with a clear understanding of where transparency is required and where it becomes counterproductive.

Liquidation Cascades as a Risk for Institutional Adoption

Fidelity presented an industry perspective on recent liquidation cascades. In one case, an initial shock triggered liquidations amounting to roughly ten percent of outstanding positions in DeFi lending. These liquidations then amplified price movements, leading to further forced selling.

These events are a predictable outcome of static risk parameters in DeFi lending smart contracts interacting with dynamic markets. Fixed thresholds and slow governance processes struggle to respond to rapid regime changes.
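The feedback loop can be reproduced with a toy model. This is a minimal sketch under loudly stated assumptions, not a model of any specific protocol or of the event Fidelity described: every position uses the same static liquidation threshold, seized collateral is sold immediately, and each unit sold moves the price down by a fixed fraction (a hypothetical linear price-impact model).

```python
def simulate_cascade(positions, price, shock, lt, impact_per_unit):
    """positions: list of (collateral_units, debt).
    A position is liquidated when collateral * price * lt < debt
    (health factor below 1), with lt a static liquidation threshold.
    Returns (final_price, rounds), where each round records
    (positions liquidated, price after their collateral is sold)."""
    price *= 1 - shock                      # exogenous initial shock
    alive = list(positions)
    rounds = []
    while True:
        liquidated = [p for p in alive if p[0] * price * lt < p[1]]
        if not liquidated:
            return price, rounds
        alive = [p for p in alive if p[0] * price * lt >= p[1]]
        sold = sum(collateral for collateral, _ in liquidated)
        # Hypothetical linear price impact of dumping the seized collateral.
        price *= max(0.0, 1 - impact_per_unit * sold)
        rounds.append((len(liquidated), price))

# Five positions of 10 units each; debts chosen so they liquidate at 96, 93, 90, 87, 84.
positions = [(10.0, lp * 10.0 * 0.8) for lp in (96, 93, 90, 87, 84)]
calm = simulate_cascade(positions, price=100.0, shock=0.05, lt=0.8, impact_per_unit=0.0)
cascade = simulate_cascade(positions, price=100.0, shock=0.05, lt=0.8, impact_per_unit=0.004)
print(len(calm[1]), round(calm[0], 2))        # one liquidation, price stays at 95
print(len(cascade[1]), round(cascade[0], 2))  # five rounds, price falls below 78
```

With these illustrative numbers, the same 5% shock liquidates a single position when selling has no price impact, but with impact every position is eventually liquidated and the price ends more than 20% below its starting level. Nothing in the static threshold reacts as the cascade unfolds.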

Static Risk Parameters Are Structurally Unsafe at Scale

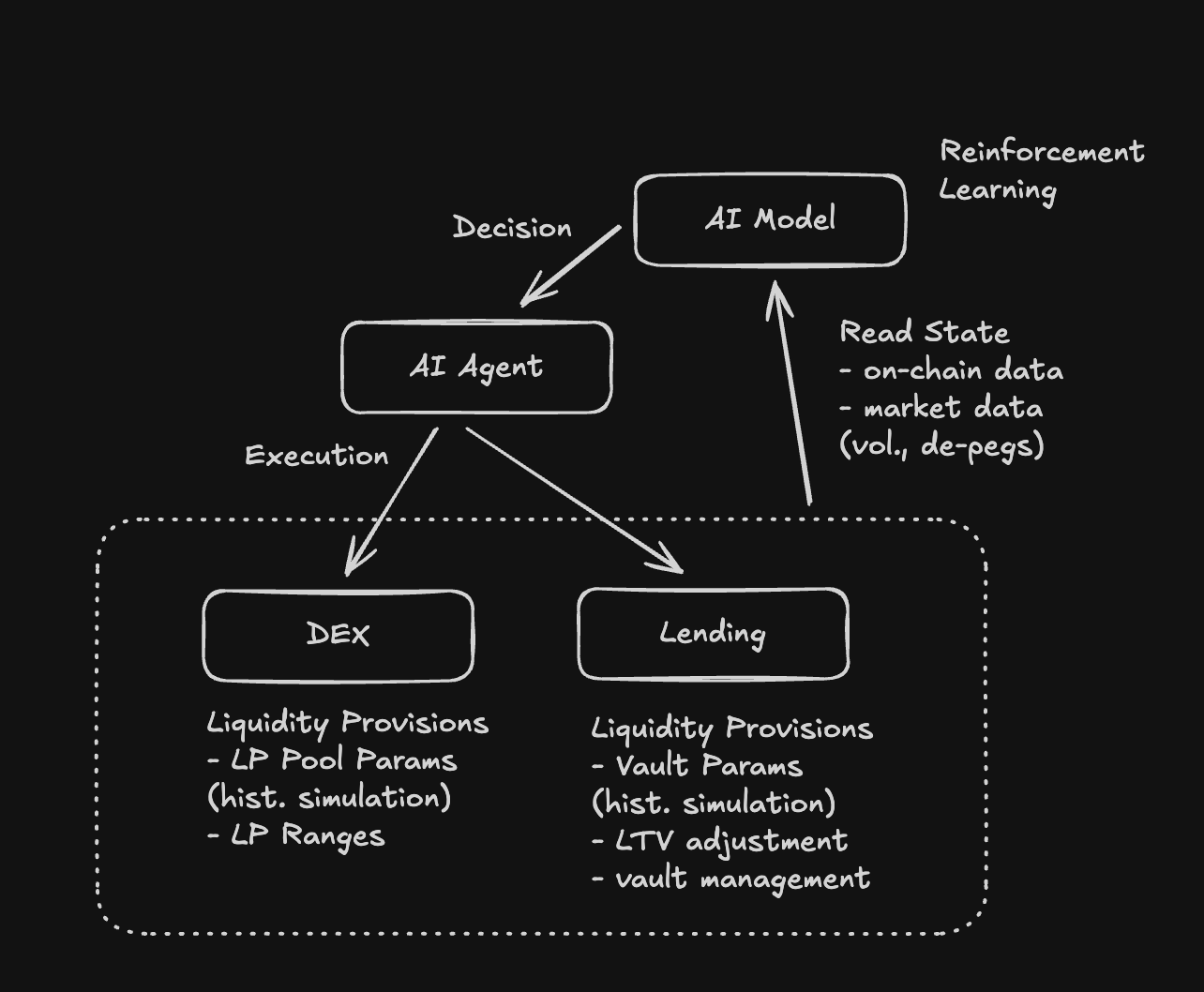

In my view, mitigating these dynamics requires moving beyond rule-based controls toward adaptive AI systems. This includes better market curation, more granular risk segmentation, and automated adjustment of parameters such as interest rates, collateral factors, and liquidation thresholds. AI becomes relevant here not as an analytics tool, but as part of the control loop.
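For reference, many lending protocols set borrow rates with a static piecewise-linear ("kinked") utilization curve, and the sketch below contrasts it with two adaptive rules. The curve shape is the standard one; the parameter values, the proportional base-rate update, and the volatility-scaled collateral factor are hypothetical illustrations of automated adjustment, not the author's method or any protocol's actual rule.

```python
def kinked_borrow_rate(u, base=0.0, slope1=0.04, slope2=0.75, kink=0.8):
    """Static kinked utilization curve: gentle slope up to the kink,
    steep slope above it. Parameter values are illustrative only."""
    if u <= kink:
        return base + slope1 * u
    return base + slope1 * kink + slope2 * (u - kink)

def adapt_base_rate(base, u, target=0.8, gain=0.5):
    """Hypothetical adaptive rule: shift the whole curve in proportion to the
    utilization error every period, instead of waiting for a governance vote."""
    return max(0.0, base + gain * (u - target))

def adapt_collateral_factor(vol, cf_max=0.85, cf_min=0.5, sensitivity=1.5):
    """Hypothetical rule: lower the collateral factor as realized volatility rises,
    clamped to [cf_min, cf_max]."""
    return min(cf_max, max(cf_min, cf_max - sensitivity * vol))

base = 0.0
for _ in range(3):  # utilization stuck at 95% for three periods
    base = adapt_base_rate(base, u=0.95)
print(round(kinked_borrow_rate(0.95), 4), round(kinked_borrow_rate(0.95, base=base), 4))
print([adapt_collateral_factor(v) for v in (0.0, 0.1, 0.3)])
```

The static curve charges the same rate at 95% utilization no matter how long that condition persists; the adaptive rule keeps raising it until borrowers respond, which is the kind of control-loop behavior the paragraph above argues for.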

Liquidation cascades and reflexive price dynamics are not anomalies. They are expected outcomes of static rules interacting with dynamic markets.

Institutions should not expect rule-based risk management to scale. Systems that cannot adapt automatically will either be overly conservative or fail under stress. Adaptive control is no longer optional.

In my recent work on reinforcement learning for interest rate adjustment in DeFi lending, we show that learning-based policies can adapt to stress events and shifting market conditions more effectively than static models. The objective is not autonomy for its own sake, but faster and more consistent responses than manual governance can provide.
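The reinforcement learning idea can be illustrated with a deliberately tiny example: tabular Q-learning over five utilization buckets, where the agent can lower, hold, or raise the rate. This is a toy sketch, not the policy or environment from the work mentioned above; the deterministic dynamics (raising the rate pushes utilization down one bucket) and the reward (negative distance from a target bucket) are hypothetical assumptions.

```python
import random

N_STATES = 5   # utilization buckets: 0 = very low ... 4 = very high
TARGET = 2     # hypothetical target utilization bucket
ACTIONS = ("lower", "hold", "raise")

def step(state, action):
    """Hypothetical deterministic dynamics: raising the rate pushes utilization
    down one bucket, lowering it pushes utilization up one bucket."""
    delta = {"lower": 1, "hold": 0, "raise": -1}[ACTIONS[action]]
    nxt = min(N_STATES - 1, max(0, state + delta))
    return nxt, -abs(nxt - TARGET)  # reward: distance from target utilization

def greedy(q, s):
    return max(range(len(ACTIONS)), key=lambda a: q[s][a])

def train(episodes=2000, horizon=20, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in range(N_STATES)]
    for _ in range(episodes):
        s = rng.randrange(N_STATES)  # random starting utilization
        for _ in range(horizon):
            # Epsilon-greedy: explore occasionally, otherwise exploit.
            a = rng.randrange(len(ACTIONS)) if rng.random() < epsilon else greedy(q, s)
            s2, r = step(s, a)
            # Q-learning update toward r + gamma * max_a' Q(s', a').
            q[s][a] += alpha * (r + gamma * max(q[s2]) - q[s][a])
            s = s2
    return q

q = train()
policy = [ACTIONS[greedy(q, s)] for s in range(N_STATES)]
print(policy)  # expected: lower, lower, hold, raise, raise
```

The learned greedy policy raises the rate when utilization is above target, lowers it when below, and holds at target. A realistic environment would add stochastic demand, stress events, and a reward that trades off lender yield against liquidity risk.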

AI’s Real Role Is Control, Not Prediction

AI agents and LLMs are mostly used in blockchain for simple automation and execution. However, AI is set to shift from a peripheral tool to a core infrastructure component.

The relevant use of AI in on-chain finance is not alpha generation or dashboards. It is automated parameter adjustment, regime detection, and coordination across markets.

Examples from my current work:

Vaults as standardized capital containers for institutional allocation

A deep-learning coordination layer operating across execution agents, risk, and incentives

Separation between capital ownership, strategy execution (AI agents), and control logic (deep learning)
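A minimal sketch of that separation of concerns, with every class and threshold hypothetical: the vault owns capital, the agent proposes allocations, and a controller sets limits the agent cannot exceed. In the setup described above the control logic is a deep learning model; here a simple volatility-based regime detector stands in for it purely for illustration.

```python
import statistics
from dataclasses import dataclass

@dataclass
class Vault:
    """Capital ownership: holds deposited assets."""
    assets: float

@dataclass
class Limits:
    max_allocation: float  # fraction of vault assets the agent may deploy

class Controller:
    """Control logic. A placeholder regime detector stands in for the
    deep learning model described in the text."""
    def __init__(self, stress_vol=0.05):
        self.stress_vol = stress_vol  # hypothetical volatility threshold

    def regime(self, returns):
        return "stress" if statistics.pstdev(returns) > self.stress_vol else "calm"

    def limits(self, returns):
        return Limits(max_allocation=0.2 if self.regime(returns) == "stress" else 0.8)

class Agent:
    """Strategy execution: proposes an allocation but does not set its own limits."""
    def propose(self, vault):
        return vault.assets  # an aggressive agent always asks for everything

def allocate(vault, agent, controller, returns):
    """The agent's proposal is capped by whatever the controller currently allows."""
    cap = controller.limits(returns).max_allocation * vault.assets
    return min(agent.propose(vault), cap)

calm_returns = [0.01, -0.01, 0.005, -0.005]
stress_returns = [0.10, -0.12, 0.08, -0.09]
print(allocate(Vault(1000.0), Agent(), Controller(), calm_returns))    # 800.0
print(allocate(Vault(1000.0), Agent(), Controller(), stress_returns))  # 200.0
```

The design point is that even an agent that always asks for everything is bounded by the controller, and the bound tightens automatically when the detected regime turns to stress.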

The chart below shows how the AI architecture can be designed to operate under stress, not just in a steady state, with AI agents handling execution and deep learning models making the critical decisions.

What This Means for 2025–2026 DeFi

The common thread across these discussions is maturity. On-chain finance is no longer constrained by whether protocols can be built. It is constrained by whether complex systems can be coordinated reliably.

For institutions, the next phase will not be about isolated yield products. It will be about integrated infrastructure that manages execution quality, risk, and capital allocation coherently across markets.

In my opinion, vaults will remain the primary interface for capital. AI will increasingly function as a shared coordination layer. Defensibility will come from how these components are integrated and operated, not from any single primitive.

Thus, my focus over the next cycle is building and operating these systems with institutional constraints in mind from the start. Research helps define the solution space, but trust is ultimately earned by keeping capital safe when markets move fast.